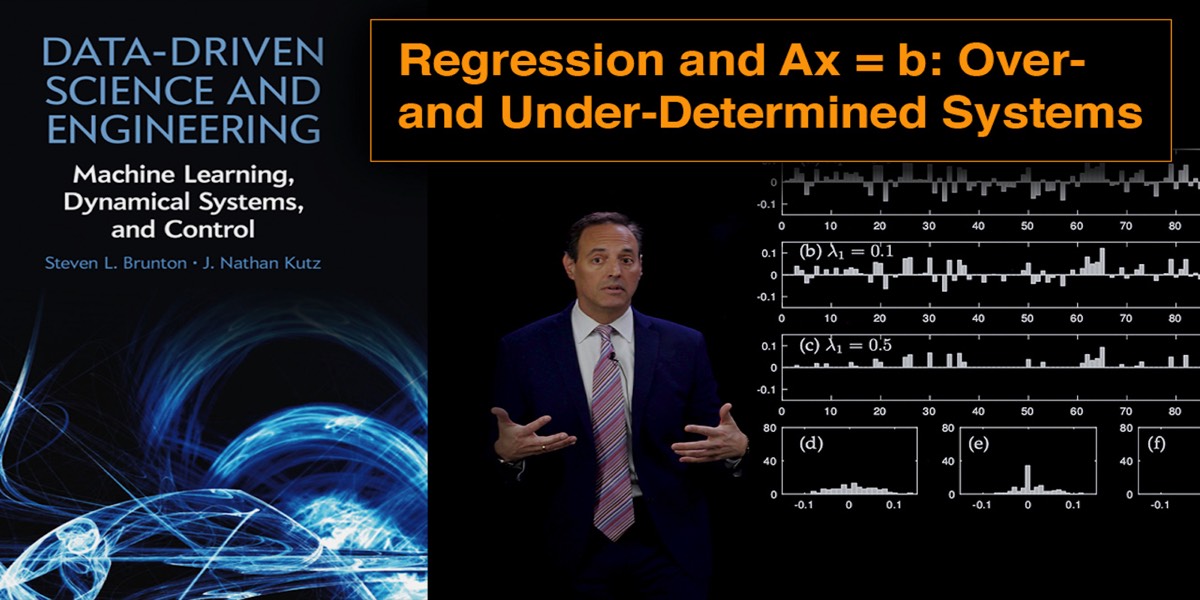

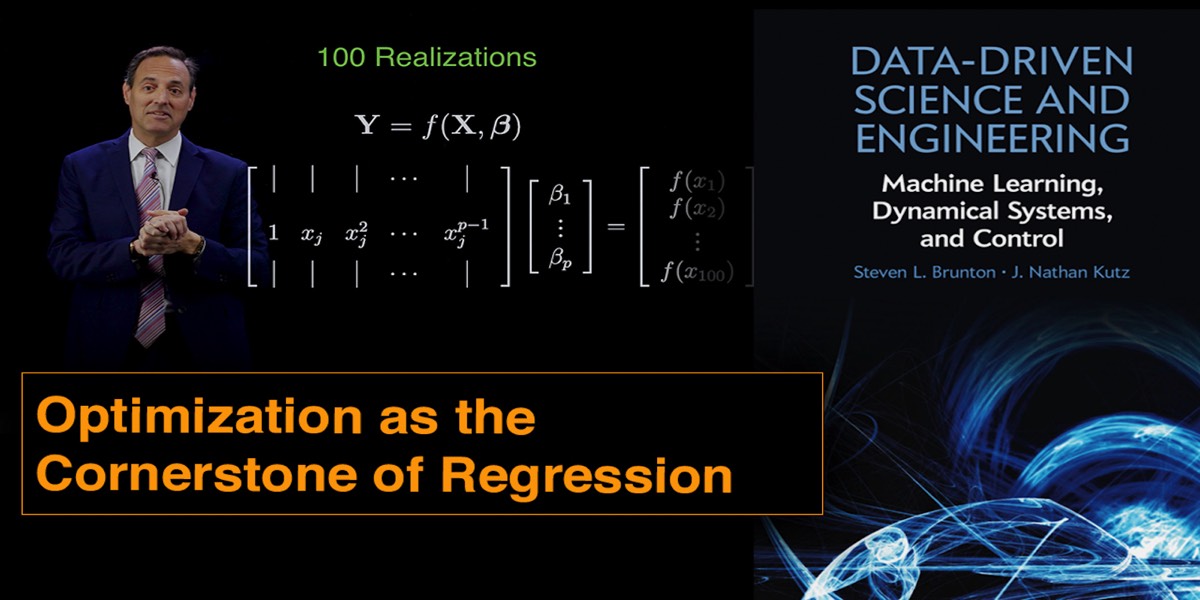

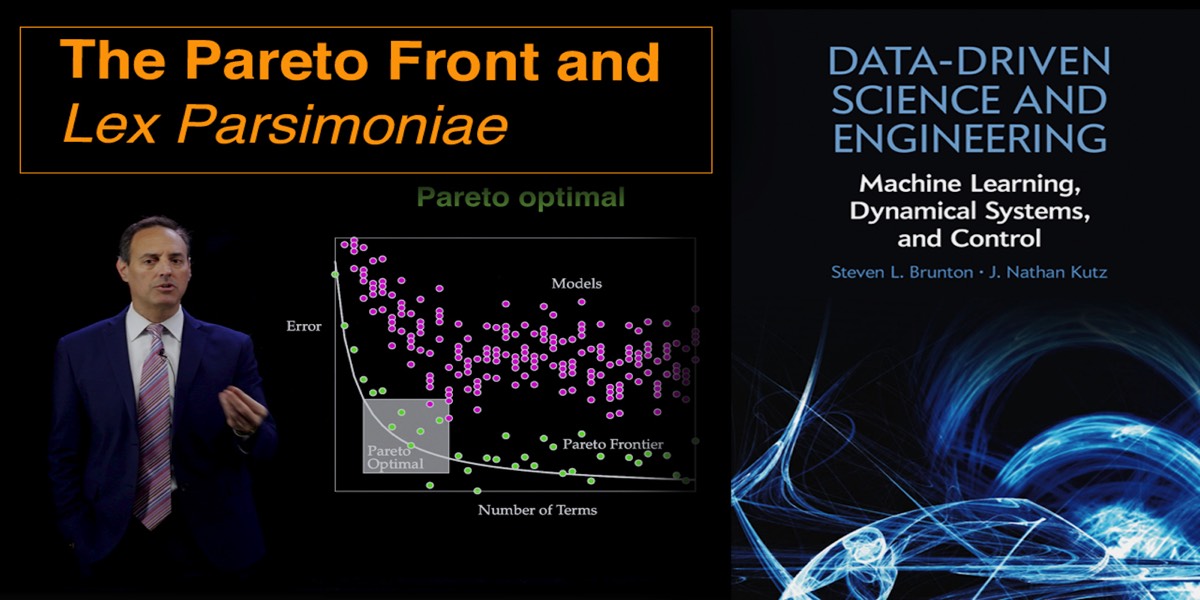

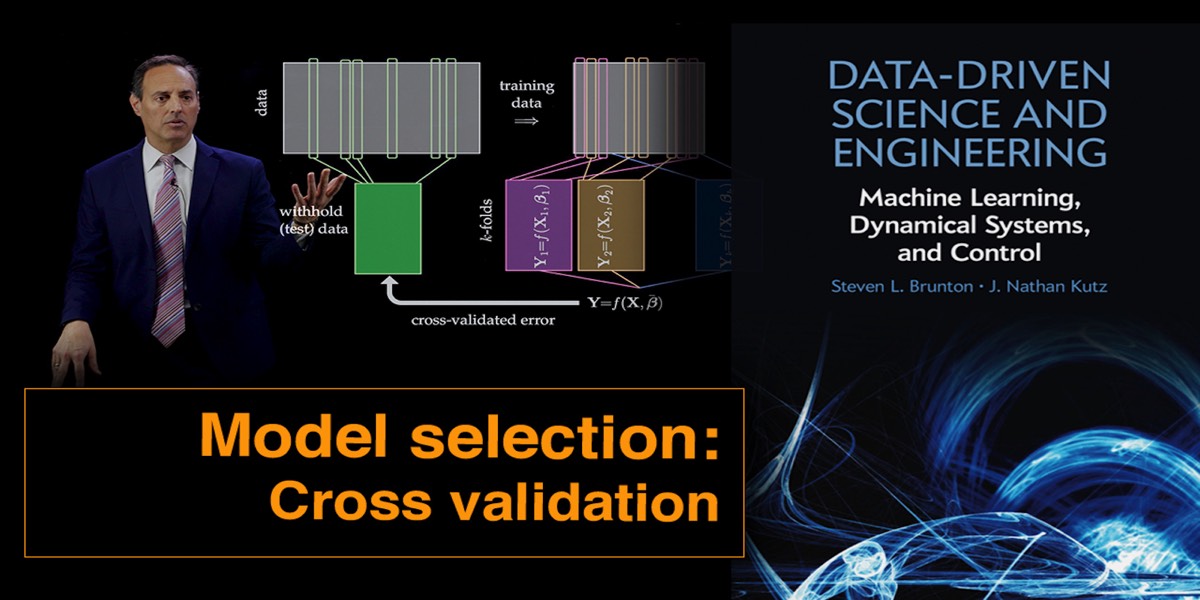

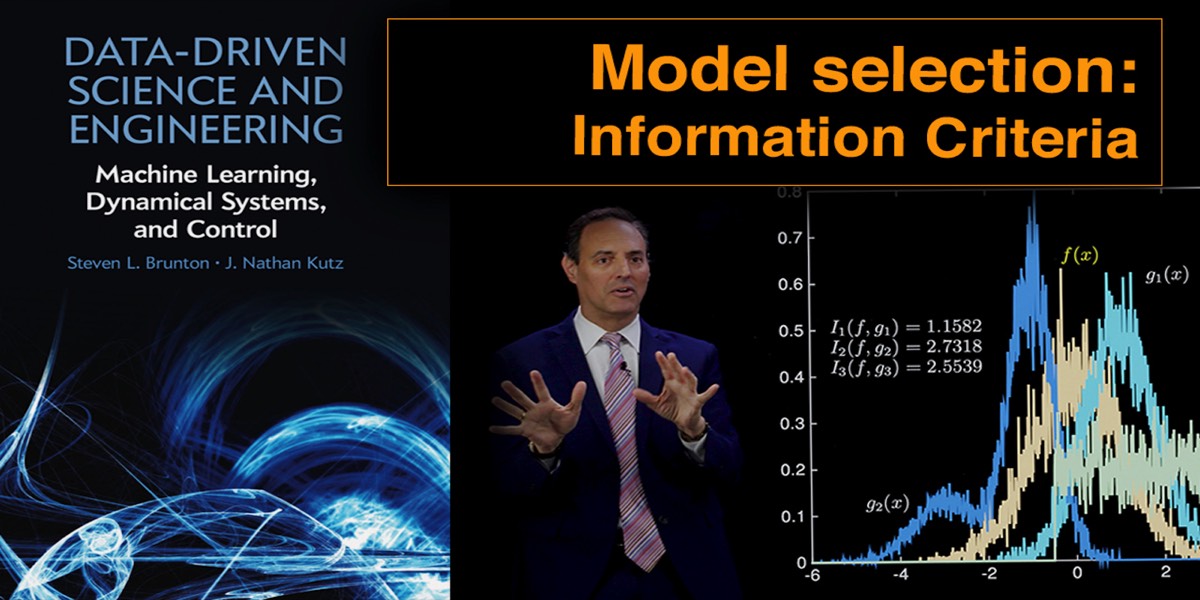

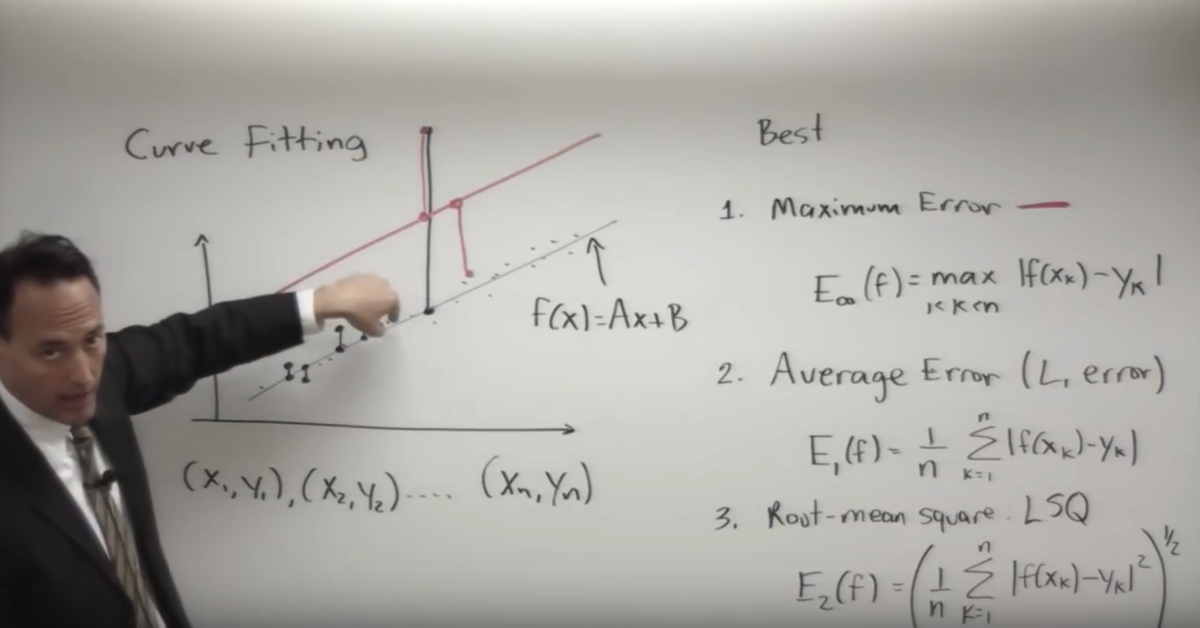

All of machine learning revolves around optimization. This includes regression and model selection frameworks that aim to provide parsimonious and interpretable models for data. Curve fitting is the most basic of regression techniques: polynomial and exponential fitting yield solutions obtained by solving linear systems of equations. This framework can be generalized to the fitting of nonlinear systems. Importantly, regression techniques are typically applied to under- or overdetermined systems, which requires cross-validation strategies to evaluate the results.
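As a rough illustration of the ideas above, here is a minimal NumPy sketch. It is not the chapter's own code. A polynomial fit becomes an overdetermined linear system $Vc \approx y$ with a Vandermonde matrix $V$, solved in the least-squares sense. The exponential fit $y = a e^{bx}$ is handled here by one common approach that we assume for illustration: taking logarithms, which gives a problem linear in $(\ln a, b)$. A simple hold-out split stands in for cross-validation when choosing the polynomial degree. The helper names `fit_polynomial`, `fit_exponential`, and `holdout_error` are hypothetical, and so is the synthetic data.

```python
import numpy as np

def fit_polynomial(x, y, degree):
    """Least-squares polynomial fit (hypothetical helper).

    Builds the Vandermonde matrix V (columns x^degree, ..., x, 1) and solves
    the overdetermined system V c ~= y in the least-squares sense.
    Returns coefficients with the highest power first, as np.polyval expects.
    """
    V = np.vander(x, degree + 1)
    coeffs, *_ = np.linalg.lstsq(V, y, rcond=None)
    return coeffs

def fit_exponential(x, y):
    """Fit y = a * exp(b * x) for y > 0 (hypothetical helper).

    Assumed approach: log-linearization. ln(y) = ln(a) + b * x is linear in
    the unknowns (ln a, b), so it is again a linear least-squares problem.
    """
    A = np.column_stack([np.ones_like(x), x])
    (log_a, b), *_ = np.linalg.lstsq(A, np.log(y), rcond=None)
    return np.exp(log_a), b

def holdout_error(x, y, degree, train_frac=0.7, seed=0):
    """Hold-out validation (hypothetical helper): fit a polynomial on a
    random training subset and return the mean squared error on the
    remaining, held-out points."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))
    n_train = int(train_frac * len(x))
    train, test = idx[:n_train], idx[n_train:]
    coeffs = fit_polynomial(x[train], y[train], degree)
    residual = np.polyval(coeffs, x[test]) - y[test]
    return np.mean(residual**2)

# Usage on synthetic (made-up) data: a noisy quadratic.
rng = np.random.default_rng(1)
x = np.linspace(0, 1, 50)
y = 1 + 2 * x - 3 * x**2 + 0.05 * rng.standard_normal(x.size)

# Compare held-out error across candidate degrees and keep the smallest.
errors = {d: holdout_error(x, y, d) for d in range(1, 8)}
best_degree = min(errors, key=errors.get)
```

The same `lstsq` call covers both fits because each one reduces to a linear system. Only the choice of basis functions changes: powers of $x$ in one case, and $\{1, x\}$ after the log transform in the other. A single hold-out split is the simplest validation scheme. k-fold cross-validation, which averages over several splits, is a common refinement.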

Supplementary Videos

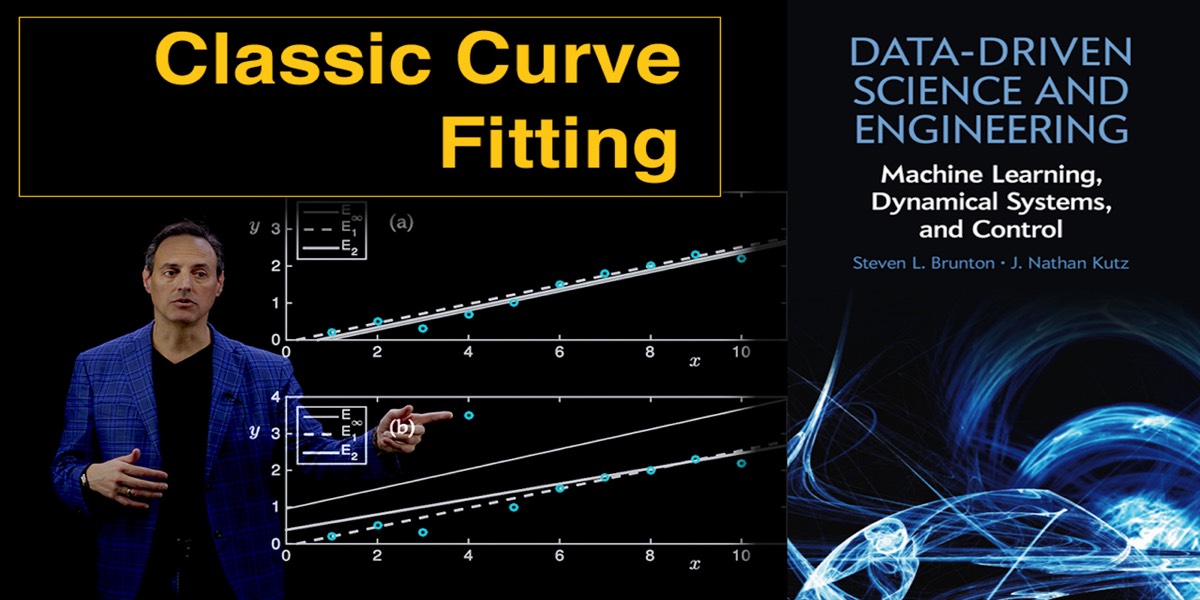

This video highlights some of the basic ideas of least-squares regression, especially polynomial fitting to data.

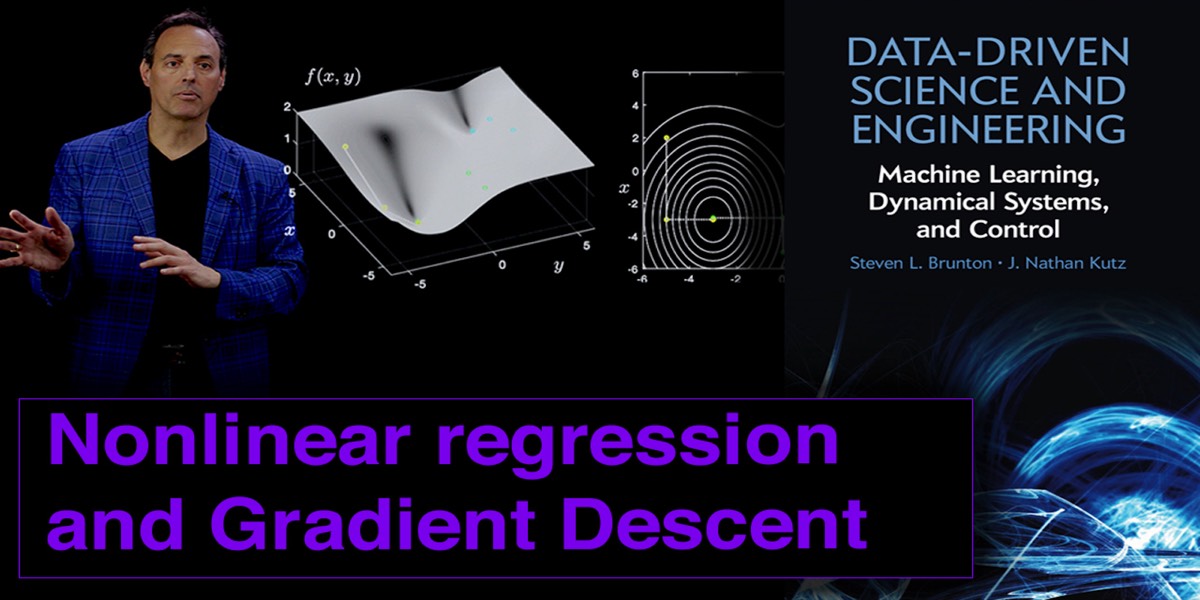

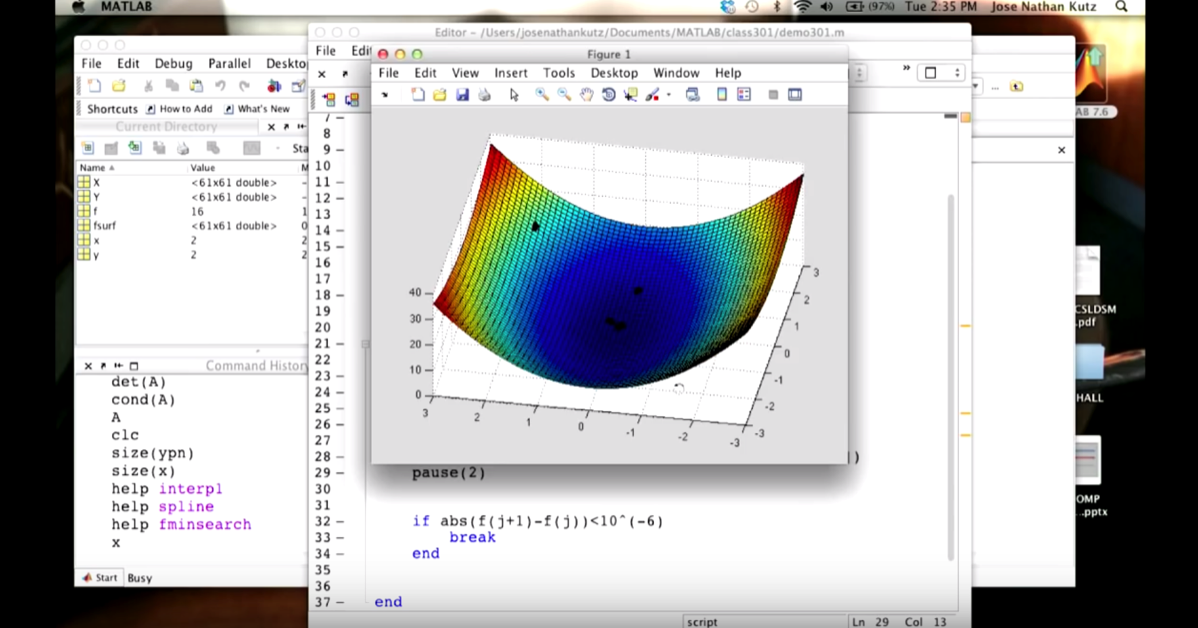

This video highlights how to develop and implement a gradient descent algorithm.
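The following is a minimal sketch of fixed-step gradient descent, $c_{k+1} = c_k - \delta \nabla f(c_k)$. It is applied to a hypothetical least-squares line fit with the objective $f(c) = \frac{1}{2n}\lVert Ac - y\rVert^2$, whose gradient is $A^\top(Ac - y)/n$. The function name `gradient_descent`, the step size $\delta = 0.5$, and the noise-free data are assumptions for illustration, not the method shown in the video.

```python
import numpy as np

def gradient_descent(grad, x0, step=0.1, tol=1e-8, max_iter=10_000):
    """Fixed-step gradient descent (hypothetical helper).

    Iterates x <- x - step * grad(x) until the gradient norm drops below
    tol or max_iter iterations have been taken. Returns (x, iterations).
    """
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            return x, k
        x = x - step * g
    return x, max_iter

# Hypothetical noise-free data on the line y = 2x + 0.5.
x = np.linspace(0, 1, 50)
y = 0.5 + 2.0 * x
A = np.column_stack([x, np.ones_like(x)])  # columns: slope, intercept

def mse_grad(c):
    """Gradient of f(c) = ||A c - y||^2 / (2n) with respect to c."""
    return A.T @ (A @ c - y) / len(y)

c, iters = gradient_descent(mse_grad, x0=[0.0, 0.0], step=0.5)
# c should approach the least-squares solution [slope, intercept] = [2.0, 0.5]
```

The step size matters. For this quadratic objective, fixed-step descent converges only if the step is below $2/\lambda_{\max}(A^\top A/n)$, which is about 1.58 for these data. The number of iterations grows as the ratio of the largest to the smallest eigenvalue grows. For a linear least-squares problem like this one, a direct solve such as `np.linalg.lstsq` gives the same answer. Gradient descent becomes attractive when the objective is nonlinear or the system is too large to solve directly.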